
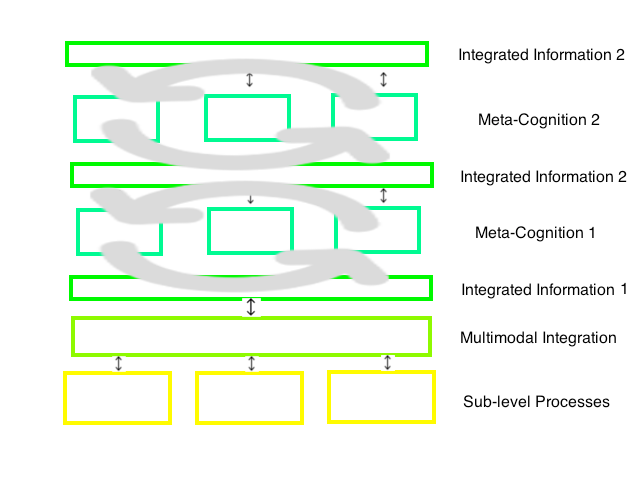

I feel that academic researchers from different communities (e.g. psychology, computer science, and philosophy) have different expectations and goals when it comes to building an intelligent machine. Sometimes we cannot even find a common definition of intelligence, or of its connection to cognition and consciousness. Here I would like to briefly introduce my own idea of a cognitive roadmap towards intelligent machines.

By an intelligent machine I mean an autonomous machine that can finish any task assigned to it, or assist people autonomously. Such a machine is usually associated with Artificial General Intelligence (AGI). One prerequisite is the acquisition of global understanding, which can be achieved through the following steps:

A. Information integration across multi-modal channels. Recent research [1,2] mostly focuses on multi-modal learning with sub-networks. The most interesting aspect of [1] is that the learning mimics the cross-modal function of the brain. In [1], semantic information is not explicitly trained in the higher layers; instead, it emerges as pre-symbolic concepts in an unsupervised way. To some extent, this work suggests that we may be able to reach level B.

B. Understanding sensory information by integrating information that comes from different modalities and sensorimotor processes [3]. Here, “understanding” (or grounding) means that the resulting (pre-)symbolic representation can be further processed by higher-level cognitive processes, e.g. reasoning and inference.

C. Making the integrated information globally accessible to the different sub-levels of tasks [4]. Specifically, there are top-down processes in which the integrated information in turn enriches the lower-level cognitive processes. These processes include body exploration and anticipation. In body exploration, for example, we move our body to touch a cup on the table to find out its texture, which removes uncertainty from the integrated information.

On top of the integrated information, and through similar top-down processes, there is a second prerequisite: a higher level of cognition (meta-cognition). This level is concerned with understanding, reasoning about, and managing the integrated information coming from the lower levels. In the cup example above, the meta-cognitive level holds a confidence level about the perceived texture [5]. In the context of intelligent machines, this level should accomplish, among others, the following tasks:

A. Coordinating the sub-levels (intelligent modules or sub-networks) so as to adaptively survive and accomplish goals (e.g. helping their hosts).

B. Enabling interaction, reasoning, and transfer learning between sub-levels (probably via the integrated information).

C. Evaluating the self-confidence of the sub-level processes and of tasks A and B.

We also hypothesize that the two prerequisites (global understanding and meta-cognition) stack hierarchically. They form increasingly abstract layers of our intelligent processes, and likewise of intelligent machines. As the integrated information becomes more abstract, subjective experience is formed. As a formal meta-cognition emerges, introspection [6] is formed.

Another unavoidable question on the road toward building intelligent machines is whether we should build a conscious machine. In my opinion, current artificial intelligence methods (e.g. deep CNNs) are unconscious, or at best semi-conscious (e.g. AlphaGo or machine translation). Such machines can finish one or a few tasks autonomously and efficiently. However, they have neither shared information nor meta-cognition to control those tasks. It is also unclear whether we should build an artificial conscious machine at all, given the moral problems involved.
For the sake of research, however, and based on the idea of the intelligent machine proposed above, I would argue that there is no boundary between intelligence and consciousness, either in technology or in our minds:

A. According to Integrated Information Theory (IIT), integrated information is, in theory, a necessary measure of consciousness. This leaves machines only one step away from subjective experience. The “hard problem” [7] of explaining the subjective experience of other animals is still impractical for scientists to address with objective experiments. Even so, I argue that it is possible for machines to form a simple subjective experience.

B. As mentioned above, meta-cognition can also give rise to the ability of introspection. To make this architecture extensible, an adaptive, self-organizing method should be used to grow the hierarchical architecture of meta-cognition. In deep learning architectures, information becomes more abstract with each layer. In the same way, as abstraction increases, the self-confidence monitor at the meta-cognitive level may become a monitor of the complete system as a whole; that is, the ability of introspection emerges.

Recent criticism of the interpretability of current deep learning has led more and more research to focus on hybrid or statistical methods and architectures. This is one reasonable move toward intelligent machines. Building intelligent machines may take another decade. Even so, what we need to consider now is how to draw the boundary between Artificial Intelligence and Artificial Consciousness. Should we ultimately build an artificial conscious machine?

[1] Aytar, Yusuf, Carl Vondrick, and Antonio Torralba. "See, Hear, and Read: Deep Aligned Representations." arXiv preprint arXiv:1706.00932 (2017).

[2] Kaiser, Lukasz, et al. "One Model To Learn Them All." arXiv preprint arXiv:1706.05137 (2017).

[3] Harnad, Stevan. "Symbol grounding problem." Scholarpedia 2.7 (2007): 2373.

[4] Baars, Bernard J. A Cognitive Theory of Consciousness. Cambridge University Press, 1993.

[5] Meyniel, Florent, Daniel Schlunegger, and Stanislas Dehaene. "The sense of confidence during probabilistic learning: A normative account." PLoS Computational Biology 11.6 (2015): e1004305.

[6] Overgaard, Morten. "Introspection." Scholarpedia 3.5 (2008): 4953.

[7] Chalmers, David J. "Facing up to the problem of consciousness." Journal of Consciousness Studies 2.3 (1995): 200-219.
